OpenStack Kuryr (Docker) Integration with Brocade VDX (AMPP)

OpenStack Kuryr integrates Docker networking with Neutron. Kuryr provides a remote driver, as defined by the Container Network Model, that translates libnetwork callbacks into the appropriate Neutron calls.

This document showcases the integration of Kuryr with a Brocade VDX device.

Setup of OpenStack Plugin

Pre-requisites

The Brocade plugins require a specific version of ncclient (a NETCONF client library). It can be obtained from the following GitHub location.

git clone https://github.com/brocade/ncclient

cd ncclient

sudo python setup.py install

Install Brocade Plugin

git clone https://github.com/openstack/networking-brocade.git --branch=<stable/branch_name>

cd networking-brocade

sudo python setup.py install

Note: the --branch option can be omitted if the latest files (master branch) from the repository are required.

Upgrade the Database

Upgrade the database so that the Brocade-specific table entries are created in the Neutron database.

neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head

OpenStack Controller Configurations (L2 AMPP Setup)

The following configuration lines must be present in ‘/etc/neutron/plugins/ml2/ml2_conf.ini’ to start the Brocade VDX mechanism driver (brocade_vdx_ampp).

[ml2]

tenant_network_types = vlan

type_drivers = vlan

mechanism_drivers = openvswitch,brocade_vdx_ampp

[ml2_type_vlan]

network_vlan_ranges = physnet1:2:500

[ovs]

bridge_mappings = physnet1:br1

Here,

- the mechanism driver must be set to ‘brocade_vdx_ampp’ along with openvswitch.

- ‘br1’ is the openvswitch bridge.

- ‘2:500’ is the vlan range used
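As a quick sanity check, the settings above can be parsed with Python's standard configparser module. This is only a minimal sketch and not part of the Brocade plugin: the helper name parse_vlan_range and the inline sample text are illustrative assumptions, and in practice the real ‘/etc/neutron/plugins/ml2/ml2_conf.ini’ would be read instead.

```python
import configparser

# Sample contents mirroring the ml2_conf.ini settings shown above.
SAMPLE_ML2_CONF = """
[ml2]
tenant_network_types = vlan
type_drivers = vlan
mechanism_drivers = openvswitch,brocade_vdx_ampp

[ml2_type_vlan]
network_vlan_ranges = physnet1:2:500

[ovs]
bridge_mappings = physnet1:br1
"""


def parse_vlan_range(value):
    """Split 'physnet:min:max' into (physnet, min_vlan, max_vlan)."""
    physnet, vlan_min, vlan_max = value.strip().split(":")
    return physnet, int(vlan_min), int(vlan_max)


config = configparser.ConfigParser()
config.read_string(SAMPLE_ML2_CONF)

drivers = [d.strip() for d in config["ml2"]["mechanism_drivers"].split(",")]
physnet, vlan_min, vlan_max = parse_vlan_range(
    config["ml2_type_vlan"]["network_vlan_ranges"])
bridge_physnet, bridge = config["ovs"]["bridge_mappings"].split(":")

# The AMPP driver must be listed, and the bridge mapping must refer to
# the same physical network as the VLAN range.
assert "brocade_vdx_ampp" in drivers
assert bridge_physnet == physnet
print(drivers, physnet, vlan_min, vlan_max, bridge)
```

The two assertions encode the relationships described in the list above: the AMPP driver is enabled alongside openvswitch, and the bridge and the VLAN range are attached to the same physical network.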

The following configuration lines for the VDX fabric must be added to either ‘/etc/neutron/plugins/ml2/ml2_conf_brocade.ini’ or ‘/etc/neutron/plugins/ml2/ml2_conf.ini’.

If they are added to ‘/etc/neutron/plugins/ml2/ml2_conf_brocade.ini’, that file must be passed as a config parameter (--config-file) when neutron-server is started.

[ml2_brocade]

username = admin

password = password

address = 10.37.18.139

ostype = NOS

physical_networks = physnet1

osversion = 5.0.0

initialize_vcs = True

nretries = 5

ndelay = 10

nbackoff = 2

Here,

[ml2_brocade] - entries

- 10.37.18.139 is the VCS Virtual IP (IP for the L2 Fabric).

- osversion - NOS version on the L2 Fabric.

- nretries - number of times a NETCONF request to the switch is retried in case of failure.

- ndelay - time delay in seconds between successive NETCONF commands in case of failure.
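The retry settings can be illustrated with a short calculation. The list above does not describe nbackoff, so this sketch assumes it is a multiplier applied to the delay after each failed attempt, which is a common retry pattern; the plugin's actual behaviour may differ, and the function name retry_delays is hypothetical.

```python
def retry_delays(nretries, ndelay, nbackoff):
    """Return the wait (in seconds) before each retry attempt.

    Assumption: the first retry waits `ndelay` seconds and each later
    wait is multiplied by `nbackoff`.
    """
    return [ndelay * nbackoff ** attempt for attempt in range(nretries)]


delays = retry_delays(nretries=5, ndelay=10, nbackoff=2)
print(delays)       # [10, 20, 40, 80, 160]
print(sum(delays))  # 310
```

Under that assumption, the values shown in the [ml2_brocade] section would allow a little over five minutes in total before a NETCONF operation is given up.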

OpenStack Compute Configurations (L2 AMPP Setup)

The following configuration lines must be present in one of the configuration files used by the openvswitch agent,

e.g. /etc/neutron/plugins/openvswitch/ovs_neutron_plugin.ini

[ovs]

bridge_mappings = physnet1:br1

network_vlan_ranges = 2:500

tenant_network_type = vlan

Here,

- ‘br1’ is the openvswitch bridge.

- ‘2:500’ is the vlan range used

VDX Configurations

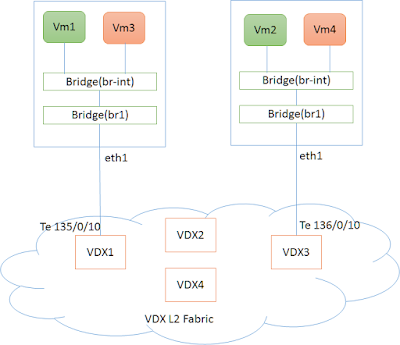

Put all the interfaces connected to the compute nodes in port-profile mode (Te 135/0/10 and Te 136/0/10 in the topology above). This is a one-time configuration.

sw0(config)# interface TenGigabitEthernet 135/0/10

sw0(conf-if-te-135/0/10)# port-profile-port

sw0(config)# interface TenGigabitEthernet 136/0/10

sw0(conf-if-te-136/0/10)# port-profile-port

Setup of Kuryr

Install the Kuryr project on each of the host nodes (both compute and controller).

git clone https://github.com/openstack/kuryr.git

cd kuryr

sudo pip install -r requirements.txt

sudo ./scripts/run_kuryr.sh

Update ‘/etc/kuryr/kuryr.conf’ to contain the following lines. The Kuryr driver runs in the global scope, and neutron_uri points to the Neutron server.

[DEFAULT]

capability_scope = global

[neutron_client]

# Neutron URL for accessing the network service. (string value)

neutron_uri = http://10.37.18.158:9696

Restart both the remote driver (first stop the instance started in the step above) and the Docker service.

sudo ./scripts/run_kuryr.sh

sudo service docker restart

Docker CLI command

Create Network

Create a Docker network called “black_network” on the Docker swarm with the subnet 92.16.1.0/24.

root@controller:~# docker_swarm network create --driver kuryr --subnet=92.16.1.0/24 --gateway=92.16.1.1 black_network

2e36e5ac17f2d4a3534678e58bc4920dbcd8653919a83ad52cbaa62057297a84

This creates a Neutron network with segmentation ID (VLAN) 43.

root@controller:~# neutron net-show kuryr-net-2e36e5ac

+---------------------------+----------------------------------------------------+
| Field                     | Value                                              |
+---------------------------+----------------------------------------------------+
| admin_state_up            | True                                               |
| availability_zone_hints   |                                                    |
| availability_zones        | nova                                               |
| created_at                | 2016-05-12T11:16:55                                |
| description               |                                                    |
| id                        | 23beebb7-c4ec-41be-a12a-96f897b1dace               |
| ipv4_address_scope        |                                                    |
| ipv6_address_scope        |                                                    |
| mtu                       | 1500                                               |
| name                      | kuryr-net-2e36e5ac                                 |
| port_security_enabled     | True                                               |
| provider:network_type     | vlan                                               |
| provider:physical_network | physnet1                                           |
| provider:segmentation_id  | 43                                                 |
| router:external           | False                                              |
| shared                    | False                                              |
| status                    | ACTIVE                                             |
| subnets                   | 5072db88-54be-4be0-a39b-f52b60a674ef               |
| tags                      | kuryr.net.uuid.uh:bcd8653919a83ad52cbaa62057297a84 |
|                           | kuryr.net.uuid.lh:2e36e5ac17f2d4a3534678e58bc4920d |
| tenant_id                 | 1035ac77d5904b0184af843e58c37665                   |
| updated_at                | 2016-05-12T11:16:56                                |
+---------------------------+----------------------------------------------------+

Creating the network also creates a port-profile on the switch with the appropriate parameters.

sw0(config)# do show running-config port-profile openstack-profile-43

port-profile openstack-profile-43
 vlan-profile
  switchport
  switchport mode trunk
  switchport trunk allowed vlan add 43
 !
!
port-profile openstack-profile-43 activate
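The names in the outputs above follow a visible pattern: the Neutron network name uses the first 8 characters of the Docker network ID, the two tags carry its lower and upper 32-character halves, and the port-profile name ends in the VLAN ID. The sketch below reproduces that pattern purely from the outputs shown here, not from the Kuryr or Brocade source code; the function names are hypothetical.

```python
def kuryr_names(docker_network_id):
    """Derive the Neutron network name and tags seen in the output above."""
    return {
        "name": "kuryr-net-" + docker_network_id[:8],
        "tag_lh": "kuryr.net.uuid.lh:" + docker_network_id[:32],
        "tag_uh": "kuryr.net.uuid.uh:" + docker_network_id[32:],
    }


def port_profile_name(vlan_id):
    """Name of the port-profile observed on the switch for a given VLAN."""
    return "openstack-profile-%d" % vlan_id


network_id = "2e36e5ac17f2d4a3534678e58bc4920dbcd8653919a83ad52cbaa62057297a84"
names = kuryr_names(network_id)
print(names["name"])          # kuryr-net-2e36e5ac
print(port_profile_name(43))  # openstack-profile-43
```

This can be handy when looking up the Neutron network or the switch port-profile that corresponds to a given Docker network.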

Create Docker Containers

Create a Docker container on each of the two nodes in the Docker swarm.

root@controller:~# docker_swarm run -itd --name=black_1 --env="constraint:node==compute" --net=black_network busybox

8079c6f22d8985307541d8fb75b1296708638a9150e0334f2155572dba582176

root@controller:~# docker_swarm run -itd --name=black_2 --env="constraint:node==controller" --net=black_network busybox

f8b4257abcf39f3e2d45886d61663027208b6596555afd56f3e4d8e45d641759

sw0(config)# do show port-profile status

Port-Profile          PPID  Activated  Associated MAC  Interface
UpgradedVlanProfile   1     No         None            None
openstack-profile-43  2     Yes        fa16.3e2b.38b6  Te 135/0/10
                                       fa16.3ebf.796c  Te 136/0/10
                                       fa16.3ed6.7f0b  Te 135/0/10
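The switch prints MAC addresses in dotted notation (fa16.3ebf.796c), whereas Linux tools such as ifconfig print them colon-separated. To match a container's hardware address against the Associated MAC column, a small conversion helper can be used. This is an illustrative sketch; the function name dotted_to_colon is an assumption and not part of any tool mentioned here.

```python
def dotted_to_colon(mac):
    """Convert 'fa16.3ebf.796c' to 'FA:16:3E:BF:79:6C'."""
    digits = mac.replace(".", "").upper()
    if len(digits) != 12:
        raise ValueError("expected 12 hex digits, got %r" % mac)
    int(digits, 16)  # raises ValueError if the string is not hexadecimal
    return ":".join(digits[i:i + 2] for i in range(0, 12, 2))


print(dotted_to_colon("fa16.3ebf.796c"))  # FA:16:3E:BF:79:6C
```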

Ping between Containers

root@controller:~# docker_swarm attach black_1

/ # ifconfig

eth0      Link encap:Ethernet  HWaddr FA:16:3E:BF:79:6C
          inet addr:92.16.1.2  Bcast:0.0.0.0  Mask:255.255.255.0
          inet6 addr: fe80::f816:3eff:febf:796c/64 Scope:Link
          UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
          RX packets:52 errors:0 dropped:14 overruns:0 frame:0
          TX packets:9 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:1000
          RX bytes:5956 (5.8 KiB)  TX bytes:738 (738.0 B)

lo        Link encap:Local Loopback
          inet addr:127.0.0.1  Mask:255.0.0.0
          inet6 addr: ::1/128 Scope:Host
          UP LOOPBACK RUNNING  MTU:65536  Metric:1
          RX packets:0 errors:0 dropped:0 overruns:0 frame:0
          TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
          collisions:0 txqueuelen:0
          RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)

/ # ping 92.16.1.3

PING 92.16.1.3 (92.16.1.3): 56 data bytes

64 bytes from 92.16.1.3: seq=0 ttl=64 time=1.825 ms

64 bytes from 92.16.1.3: seq=1 ttl=64 time=0.819 ms

64 bytes from 92.16.1.3: seq=2 ttl=64 time=0.492 ms

64 bytes from 92.16.1.3: seq=3 ttl=64 time=0.458 ms

64 bytes from 92.16.1.3: seq=4 ttl=64 time=0.489 ms

64 bytes from 92.16.1.3: seq=5 ttl=64 time=0.480 ms

64 bytes from 92.16.1.3: seq=6 ttl=64 time=0.438 ms

64 bytes from 92.16.1.3: seq=7 ttl=64 time=0.501 ms
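The ping succeeds without any routing because both addresses sit in the subnet that was given when the network was created. A minimal sketch with Python's standard ipaddress module confirms this for the addresses in the transcript; the helper name on_same_subnet and the dictionary keys are illustrative assumptions.

```python
import ipaddress

# Values taken from the network creation command and the transcript above.
subnet = ipaddress.ip_network("92.16.1.0/24")
gateway = ipaddress.ip_address("92.16.1.1")
addresses = {
    "black_1": ipaddress.ip_address("92.16.1.2"),
    "ping_target": ipaddress.ip_address("92.16.1.3"),
}


def on_same_subnet(network, *hosts):
    """Return True if every given address belongs to the network."""
    return all(host in network for host in hosts)


print(on_same_subnet(subnet, gateway, *addresses.values()))  # True
print(subnet.netmask)                                        # 255.255.255.0
```

The printed netmask matches the Mask value reported by ifconfig inside the container.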